Abstract

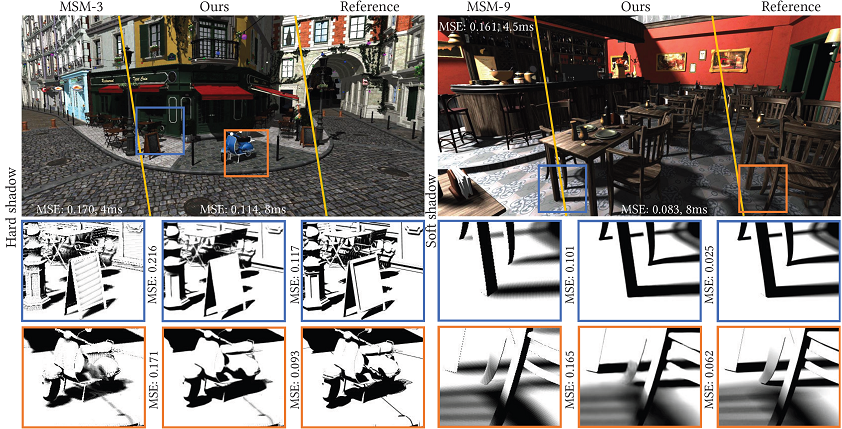

We present a neural extension of basic shadow mapping for fast, high-quality hard and soft shadows. We compare favorably to fast pre-filtering shadow mapping, all while producing visual results on par with ray-traced hard and soft shadows. We show that combining memory-bandwidth-aware architecture specialization and careful temporal-window training leads to a fast, compact, and easy-to-train neural shadowing method. Our technique is memory-bandwidth conscious, eliminates the need for post-process temporal anti-aliasing or denoising, and supports scenes with dynamic view, emitters, and geometry while remaining robust to unseen objects.

Comparison

Pre-recorded presentation

Downloads

Paper: neuralShadowMapping.pdf (3.5MB)

Supplemental: neuralShadowMappingSupplemental.pdf (2.3MB)

Video results: gDrive (MP4, 902MB)

Bibtex: nsm.bib

Acknowledgements

We thank the reviewers for their constructive feedback, ORCA for the Amazon Lumberyard Bistro model, the Stanford CG Lab for the Bunny, Buddha, and Dragon models, Marko Dabrovic for the Sponza model, and Morgan McGuire for the Bistro, Conference, and Living Room models. This work was done while Sayantan was an intern at Meta Reality Labs Research. While at McGill University, he was also supported by a Ph.D. scholarship from the Fonds de recherche du Québec – nature et technologies.