Applications of differentiable indirection across the graphics pipeline. From left to right: texture compression, SDF representation, texture filtering and parametric shading, and radiance field compression.

Abstract

We introduce differentiable indirection – a novel learned primitive that employs differentiable multi-scale lookup tables as an effective substitute for traditional compute and data operations across the graphics pipeline. We demonstrate its flexibility on a number of graphics tasks, i.e., geometric and image representation, texture mapping, shading, and radiance field representation. In all cases, differentiable indirection seamlessly integrates into existing architectures, trains rapidly, and yields both versatile and efficient results.
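The following is a minimal one-dimensional sketch of the lookup-table idea described above, not the paper's implementation. It assumes two tables: a primary table whose interpolated output is treated as a pointer, and a cascaded table that the pointer indexes. The names `lerp_lookup` and `indirection`, the table sizes, and the sigmoid used to keep the pointer in range are all illustrative assumptions. Because both lookups are linearly interpolated, a gradient can flow through the pointer back to the primary table.

```python
import math

def lerp_lookup(table, u):
    """Linearly interpolated lookup at normalized coordinate u in [0, 1].

    Returns (value, d value / d u). Requires len(table) >= 2.
    """
    n = len(table)
    x = min(max(u, 0.0), 1.0) * (n - 1)   # continuous index into the table
    i0 = min(int(x), n - 2)               # left neighbour of the active segment
    w = x - i0                            # interpolation weight in [0, 1]
    value = (1.0 - w) * table[i0] + w * table[i0 + 1]
    slope = (table[i0 + 1] - table[i0]) * (n - 1)
    return value, slope

def indirection(primary, cascaded, u):
    """Two-stage lookup: primary yields a pointer, the pointer indexes cascaded.

    Returns (value, d value / d raw), where raw is the interpolated primary
    output before the sigmoid (the sigmoid is an assumption of this sketch).
    """
    raw, _ = lerp_lookup(primary, u)
    pointer = 1.0 / (1.0 + math.exp(-raw))        # squash pointer into (0, 1)
    value, d_value_d_pointer = lerp_lookup(cascaded, pointer)
    # Chain rule through the sigmoid: gradient that reaches the primary table.
    d_value_d_raw = d_value_d_pointer * pointer * (1.0 - pointer)
    return value, d_value_d_raw

# Hypothetical toy tables; in a trained model both would be learned.
primary = [-2.0, -0.5, 0.5, 2.0]
cascaded = [0.0, 1.0, 4.0, 9.0, 16.0]
value, grad = indirection(primary, cascaded, 0.4)
print(value, grad)
```

In this sketch the gradient with respect to the cascaded entries is just the interpolation weights, while the gradient with respect to the primary entries passes through the slope of the cascaded table. That slope is what makes the stored pointer trainable.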

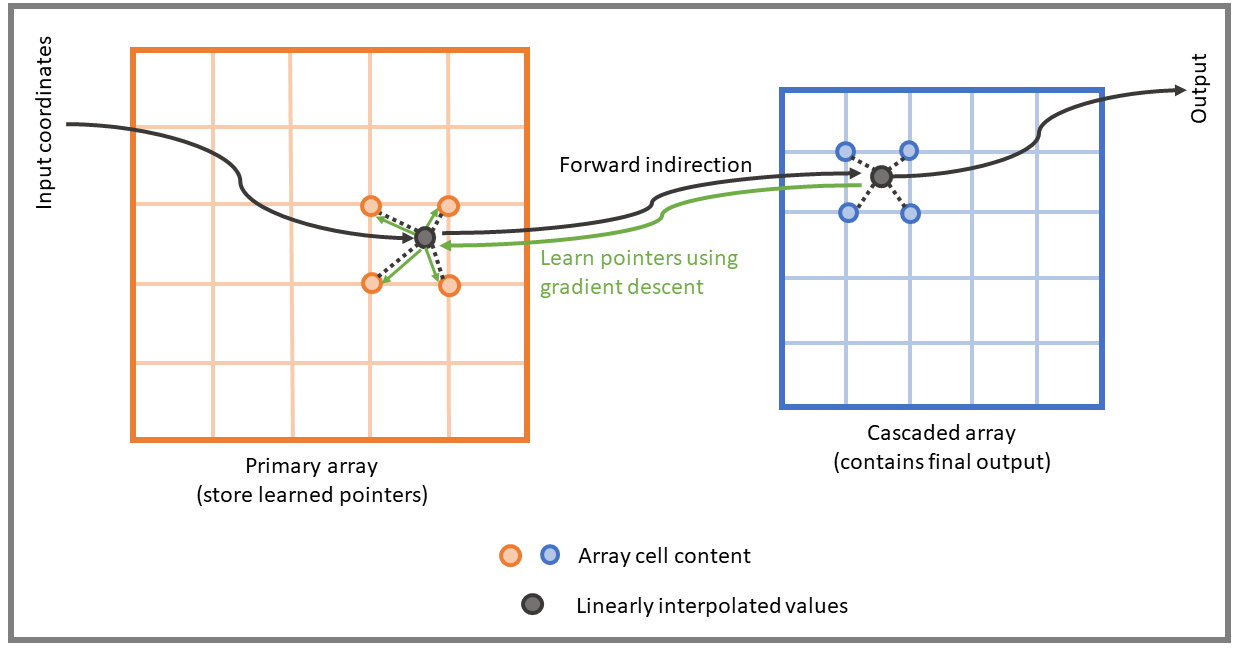

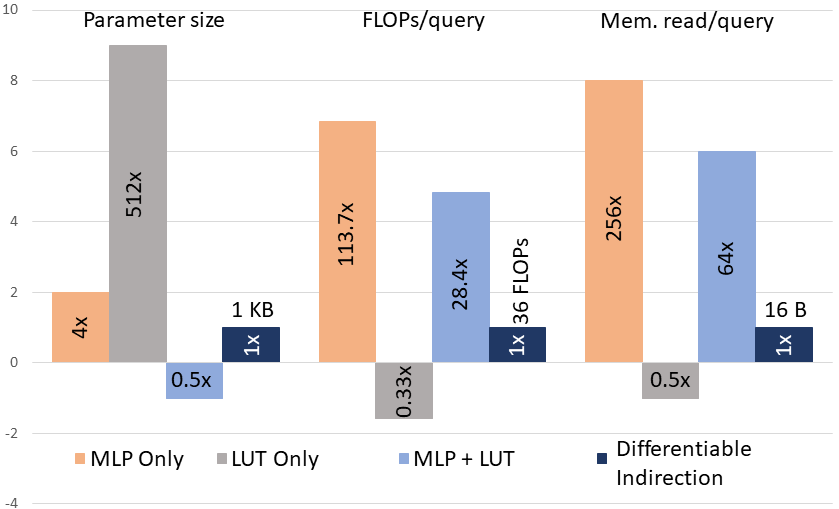

What is differentiable indirection?

Why use differentiable indirection?

Paper presentation

Video results

Results & comparison

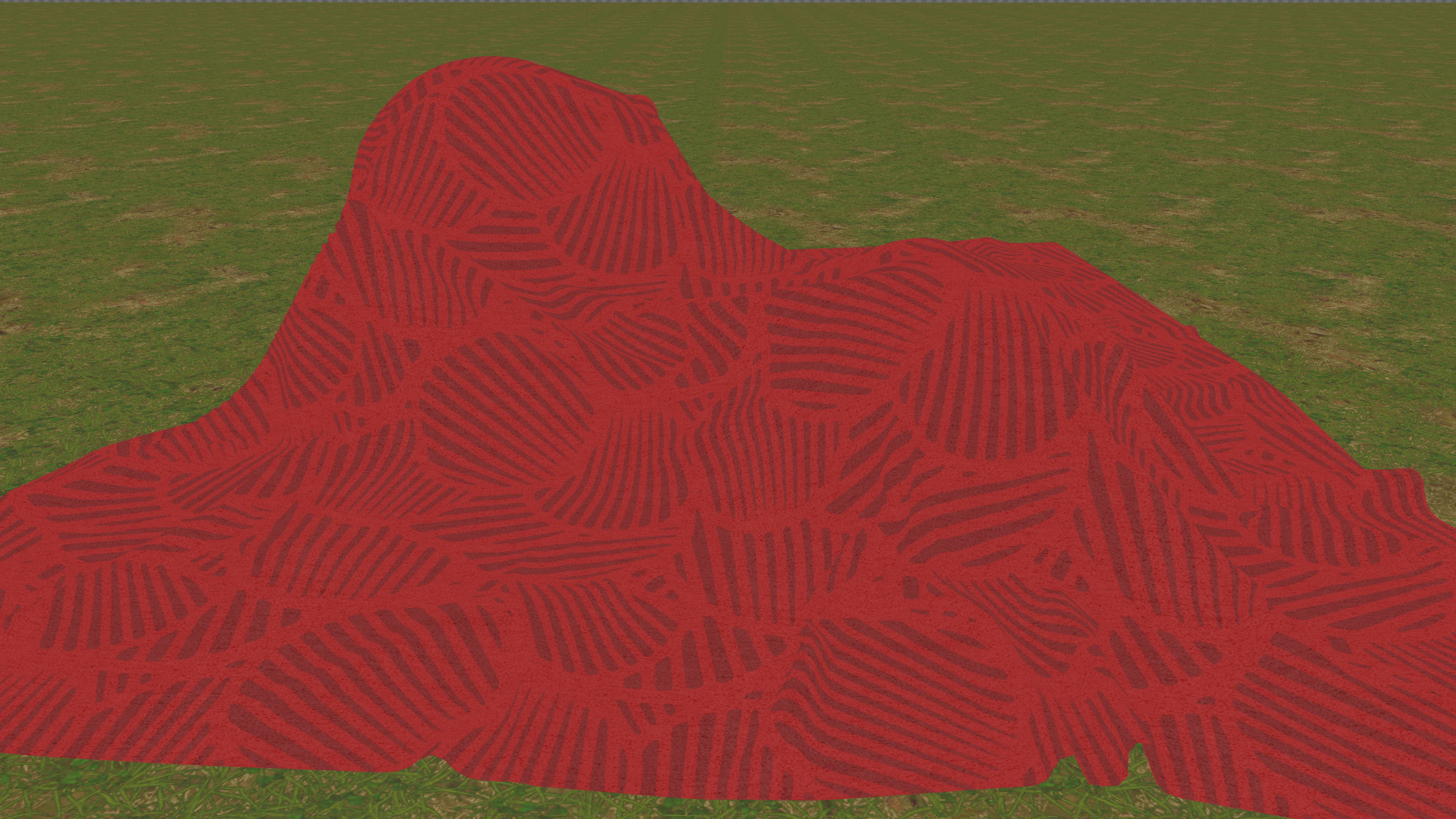

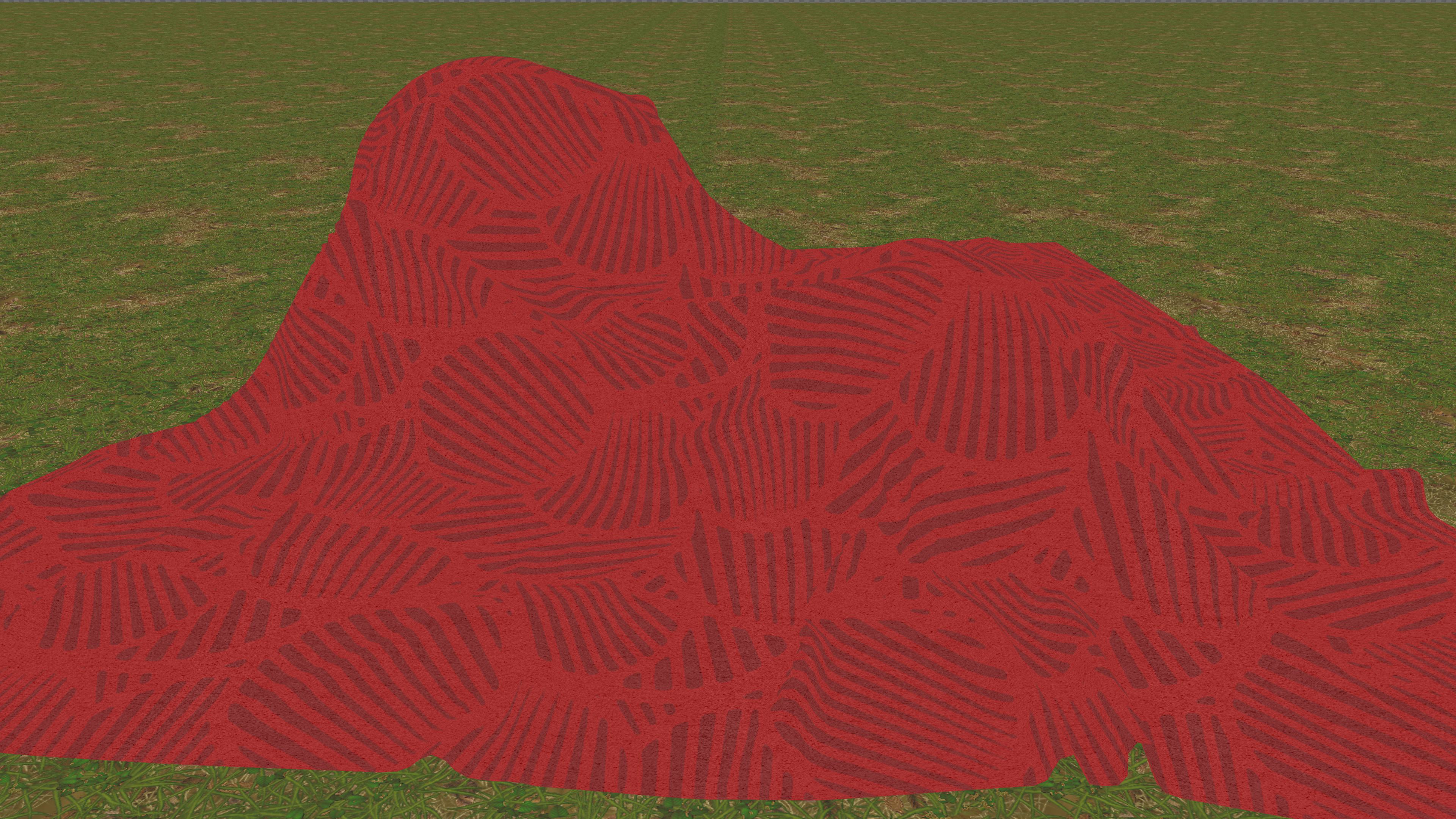

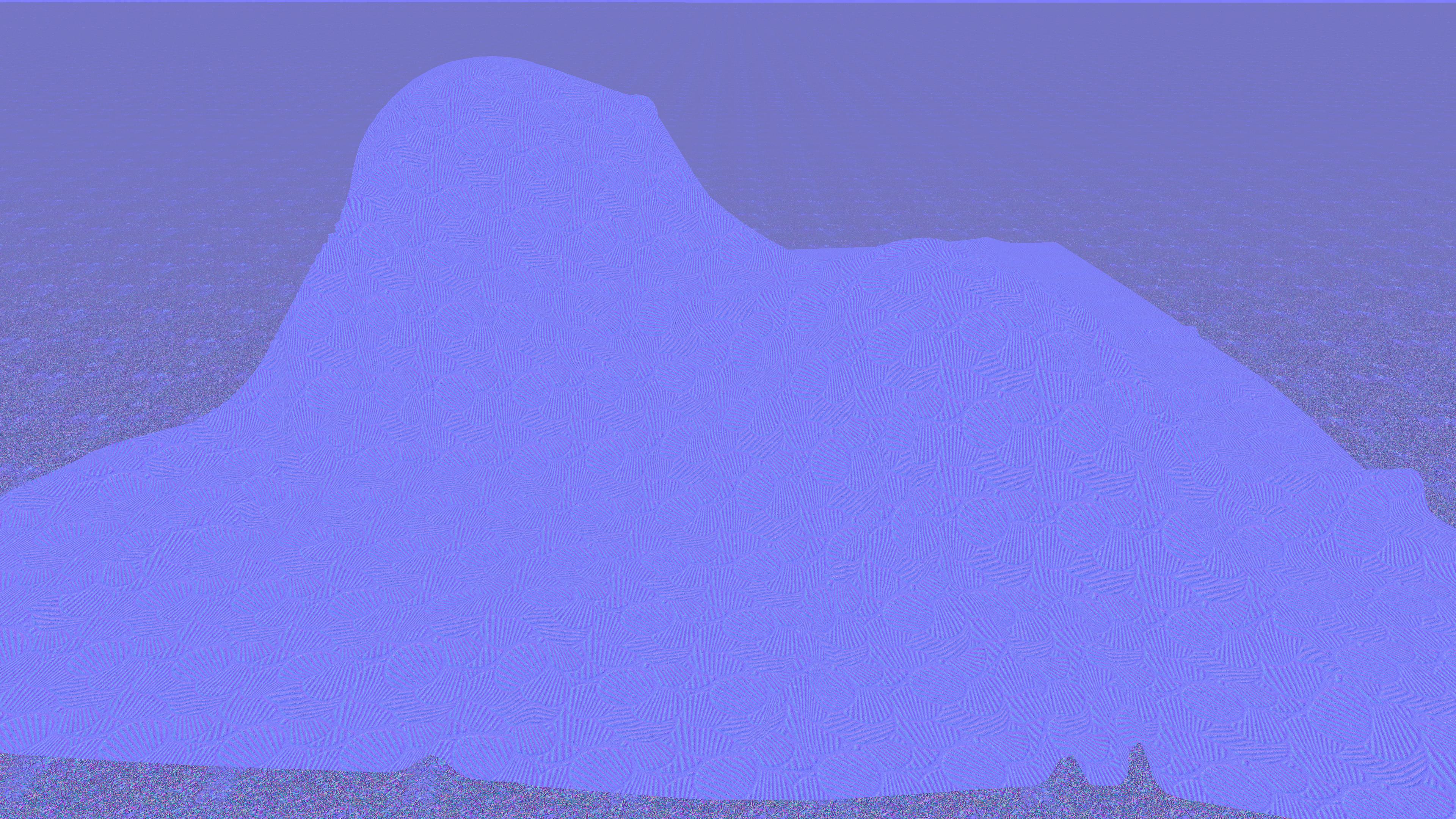

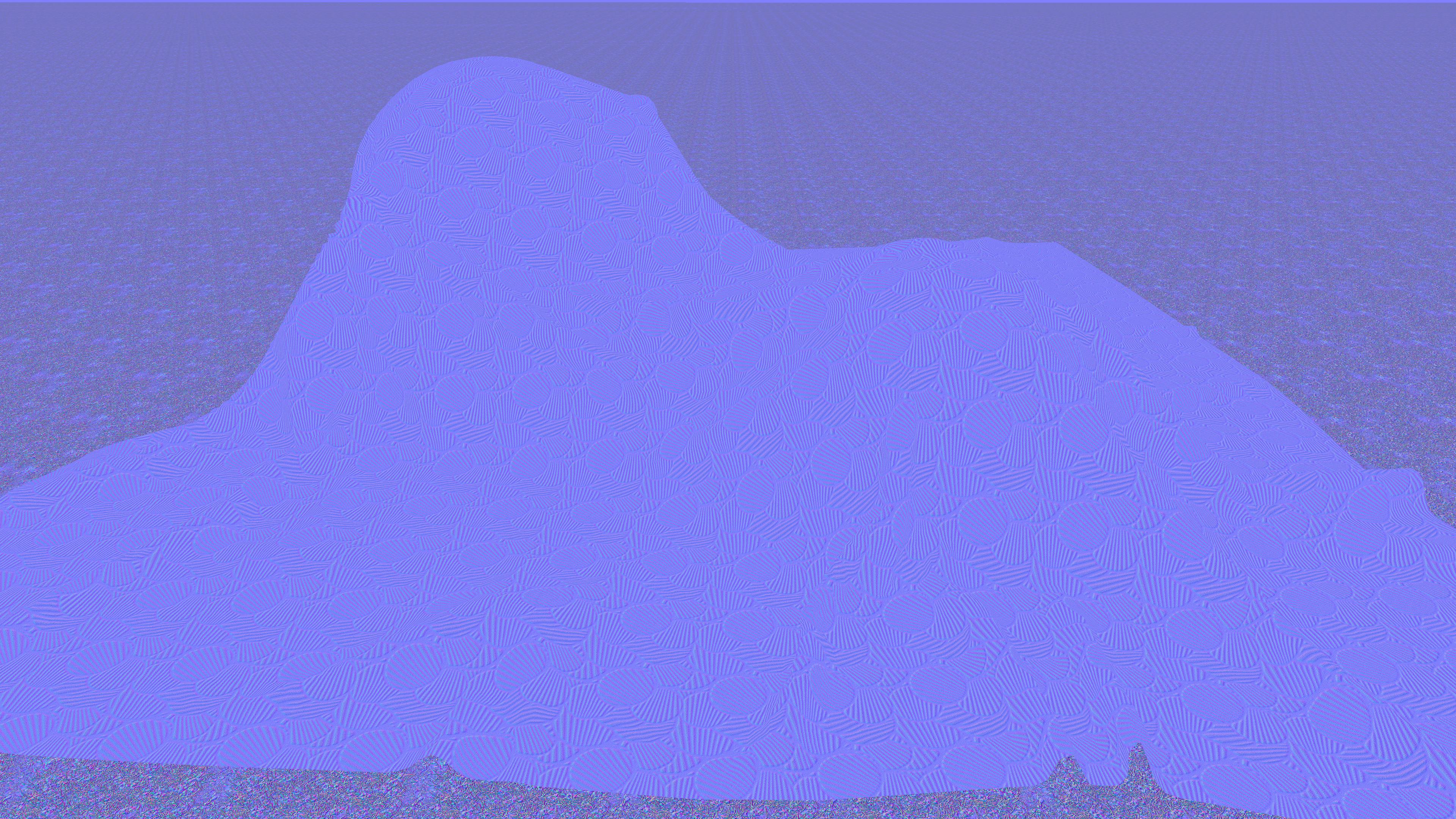

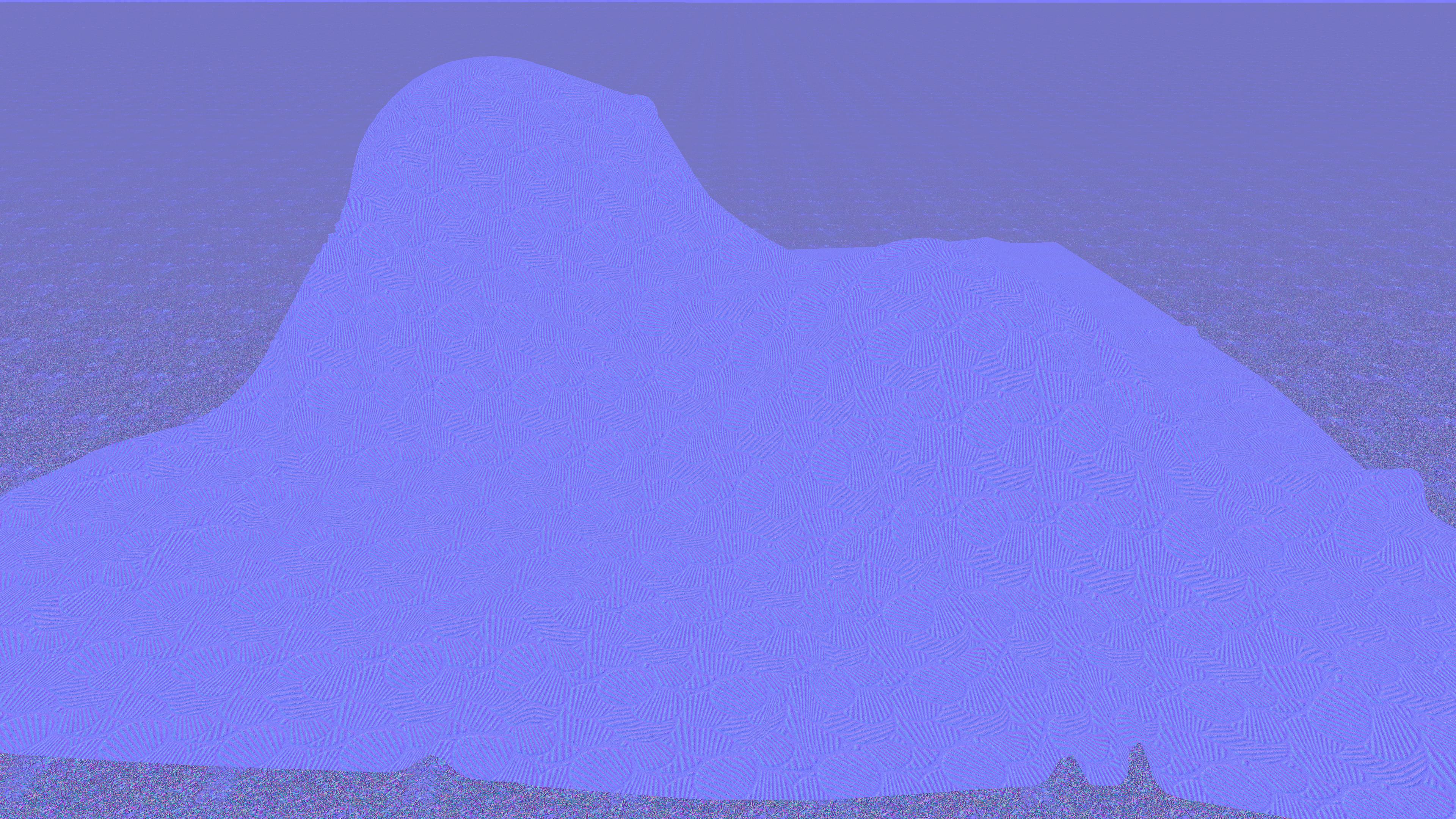

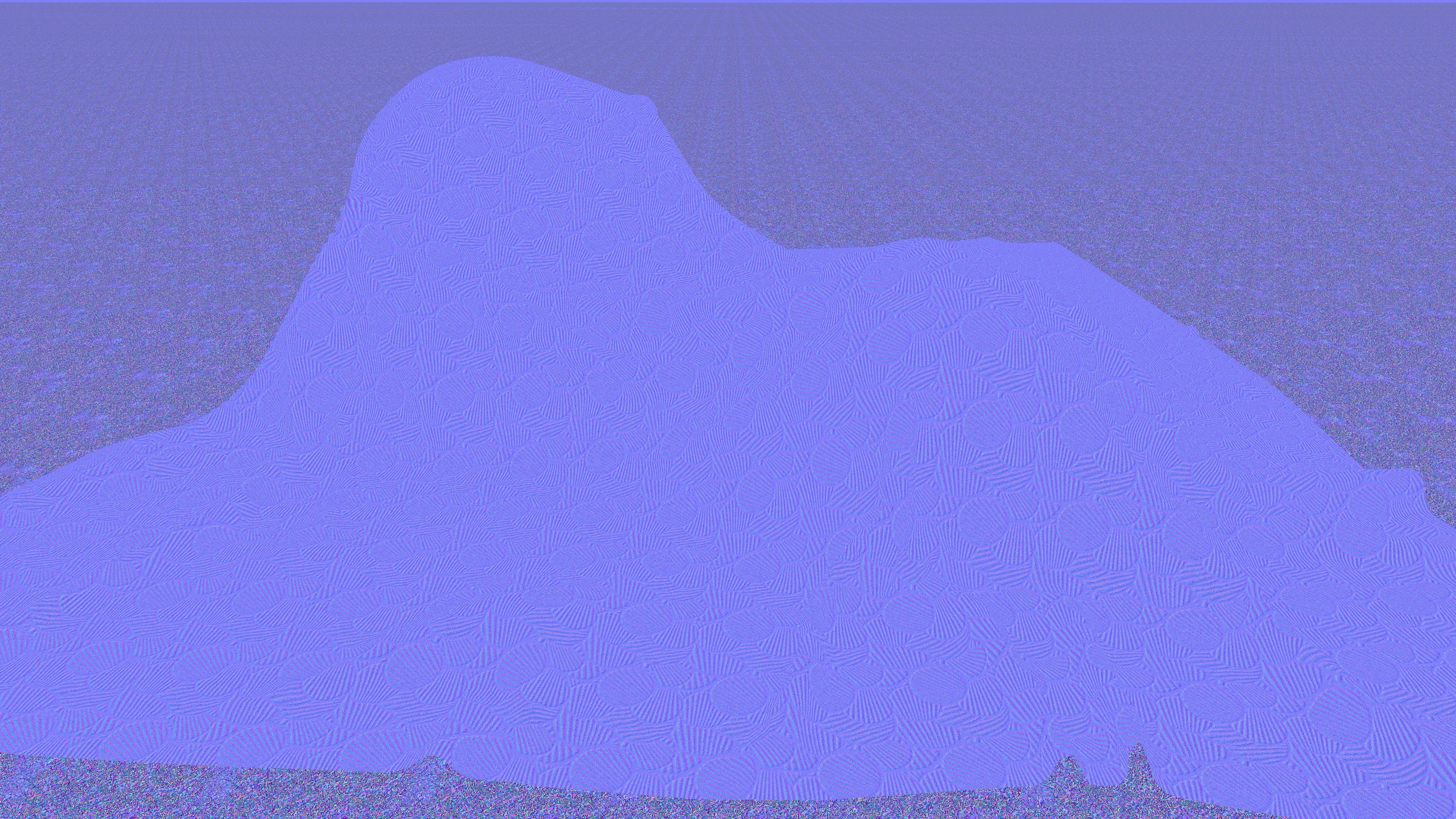

SDF representation using differentiable indirection compared with a reference k-d tree with 1B surface sample points. The SDF is reconstructed as a mesh for visualization using the marching cubes algorithm.

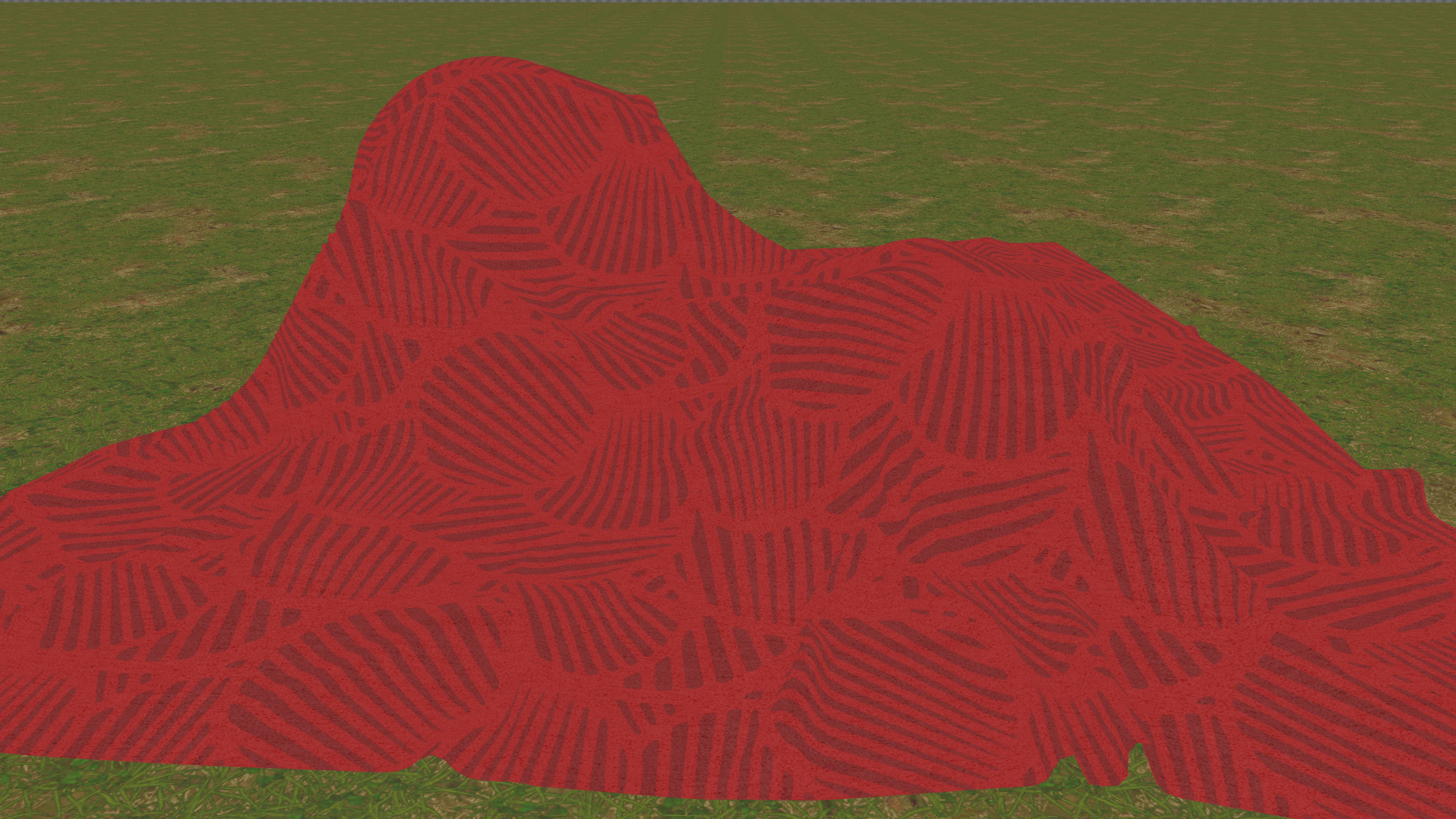

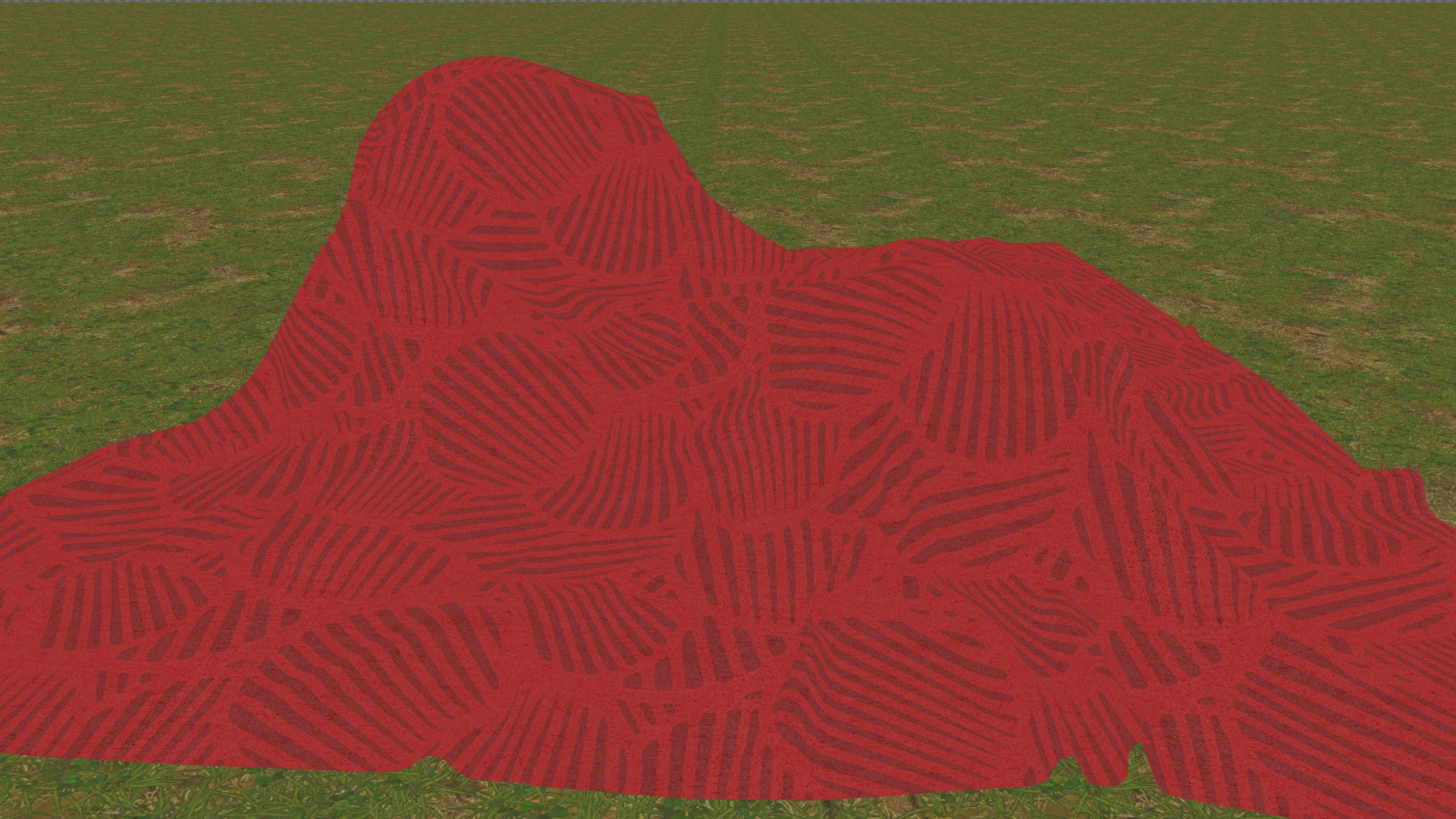

SDF representation using differentiable indirection compared with MRHE with 8 levels (6 dense + 2 hash) + MLP (4 hidden layers, 16 wide). The SDF is reconstructed as a mesh for visualization using the marching cubes algorithm.

6x image compression using differentiable indirection compared with uncompressed 3K (1K x 3) reference.

12x image compression using differentiable indirection compared with uncompressed 3K (1K x 3) reference.

24x image compression using differentiable indirection compared with uncompressed 3K (1K x 3) reference.

48x image compression using differentiable indirection compared with uncompressed 3K (1K x 3) reference.

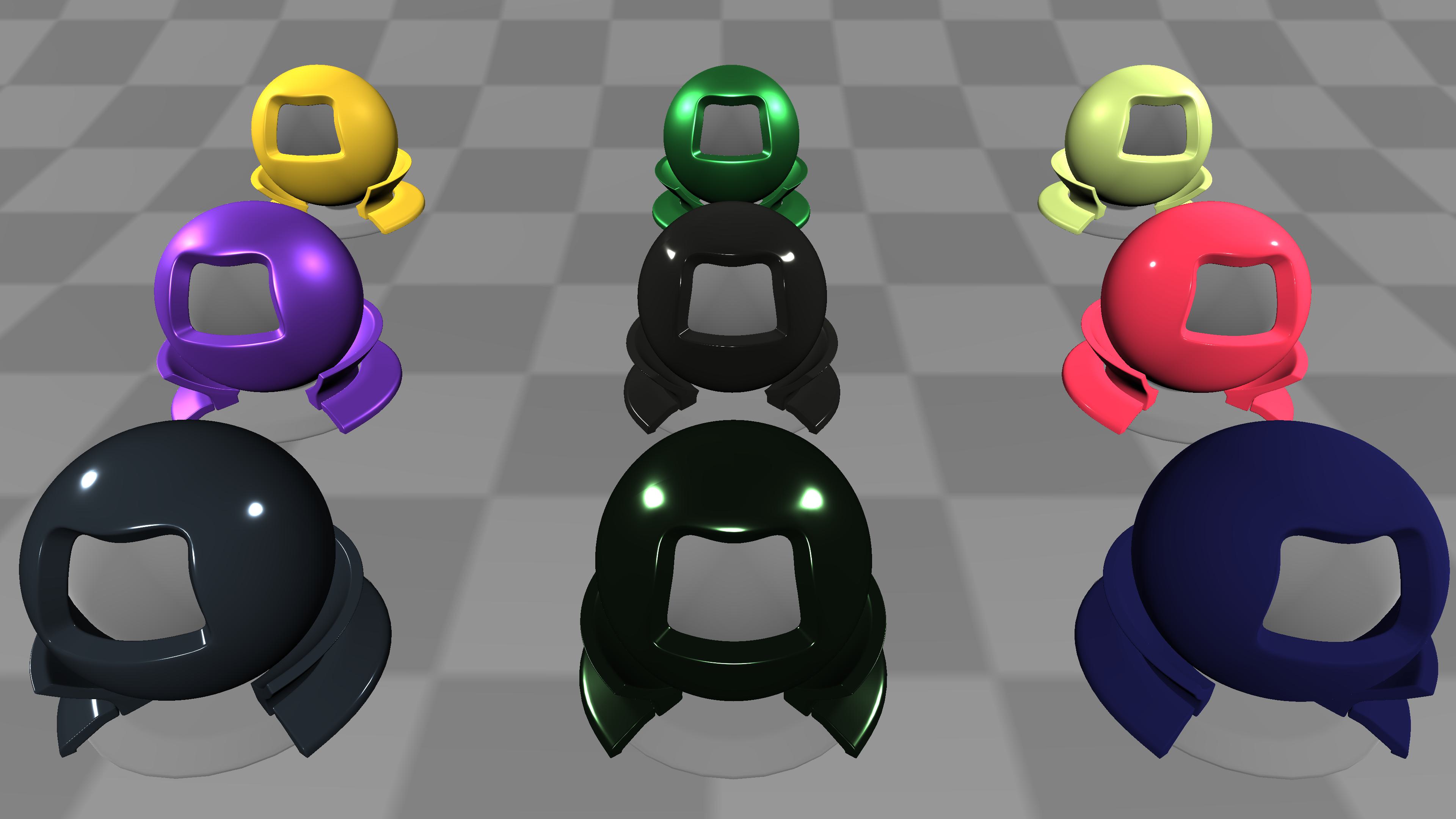

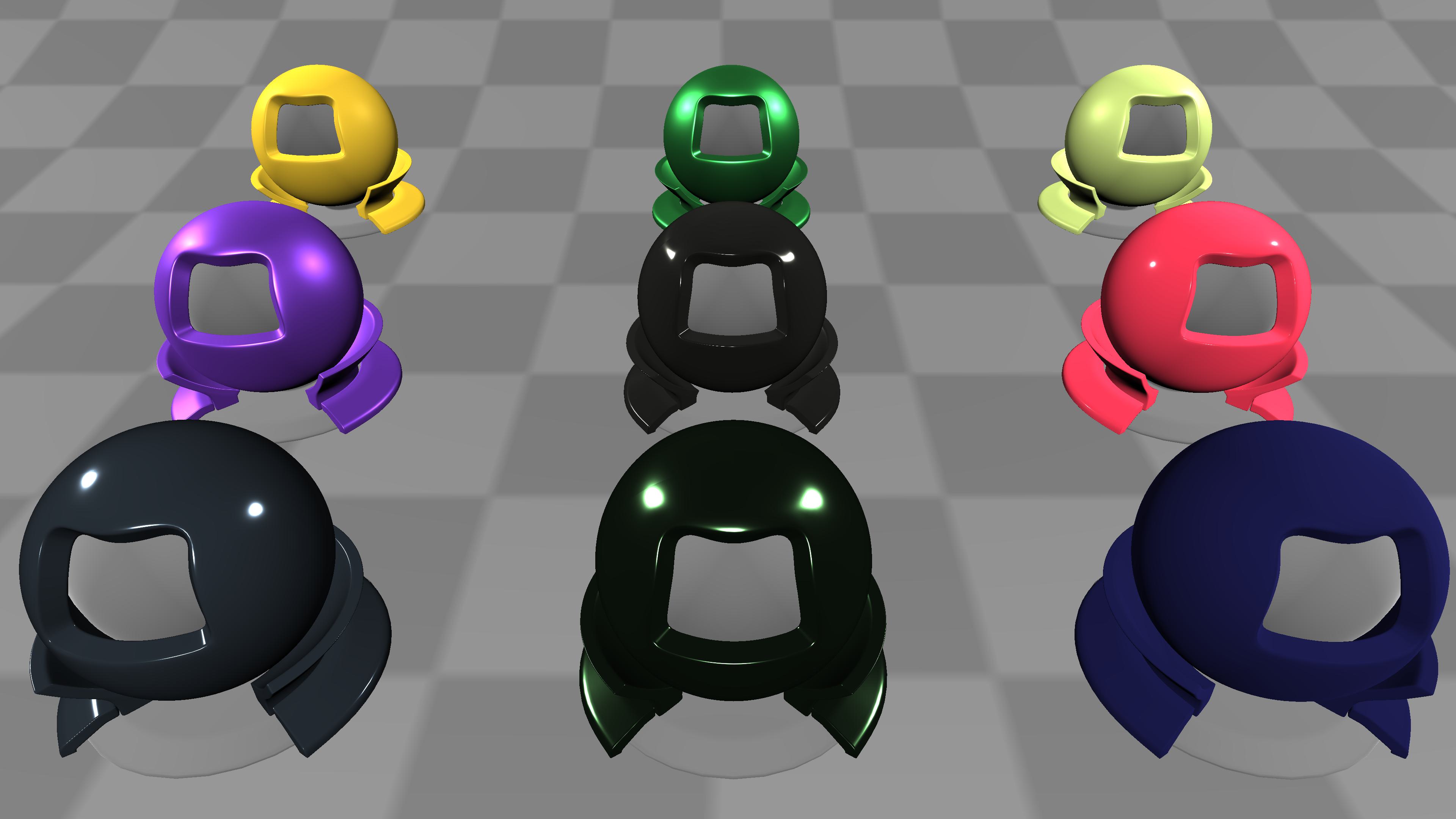

Disney BRDF approximation using differentiable indirection compared with analytic reference.

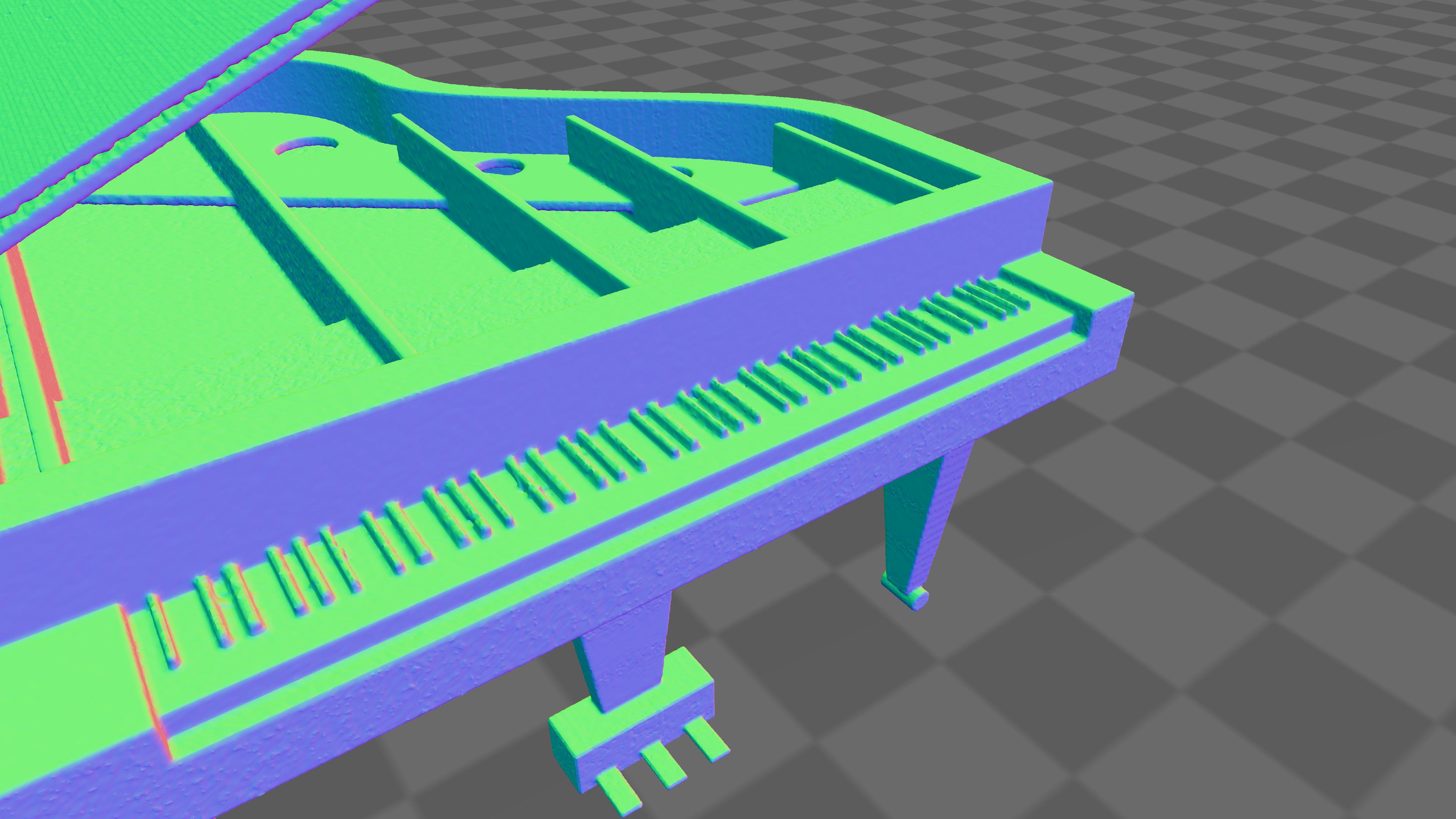

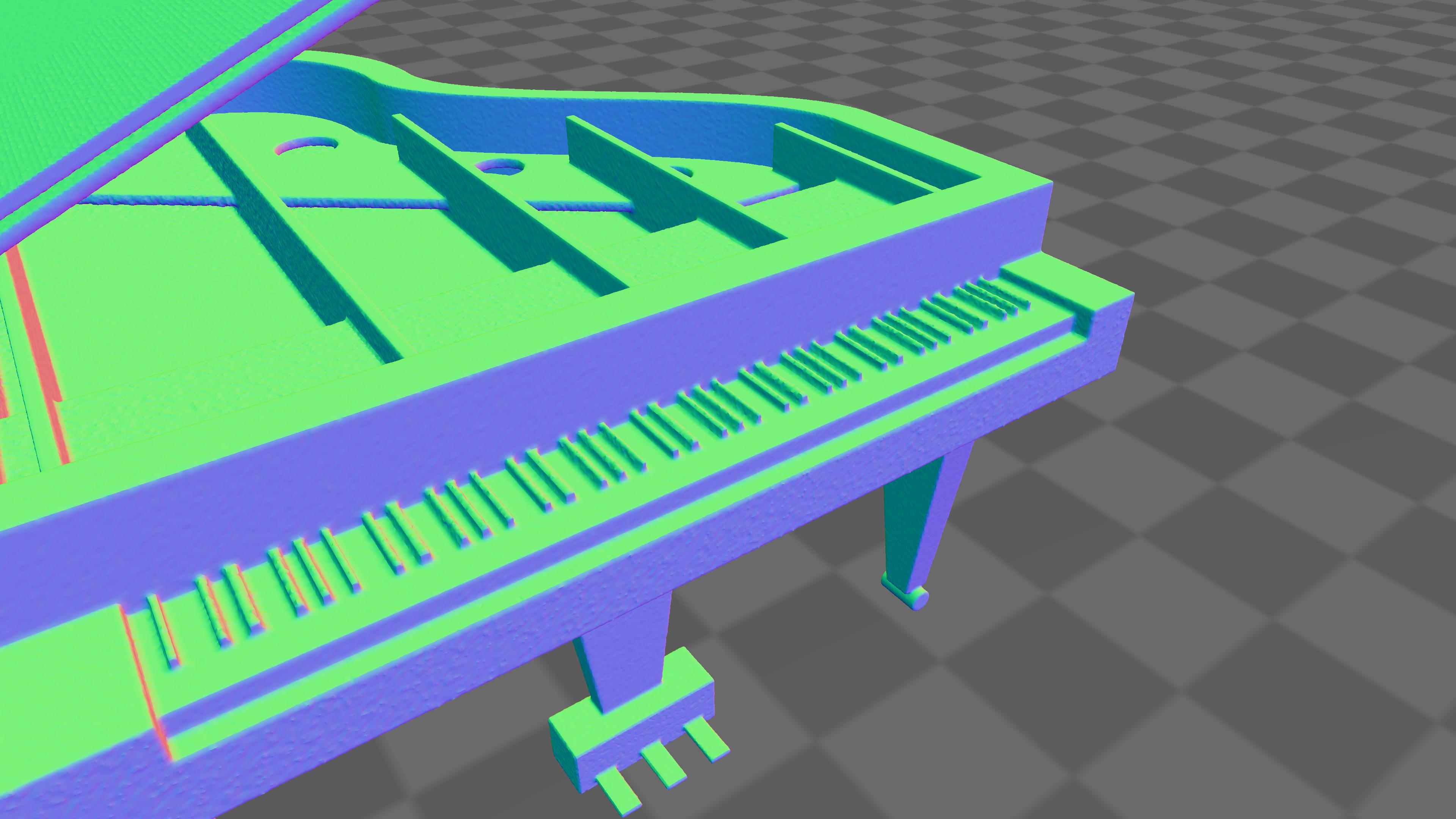

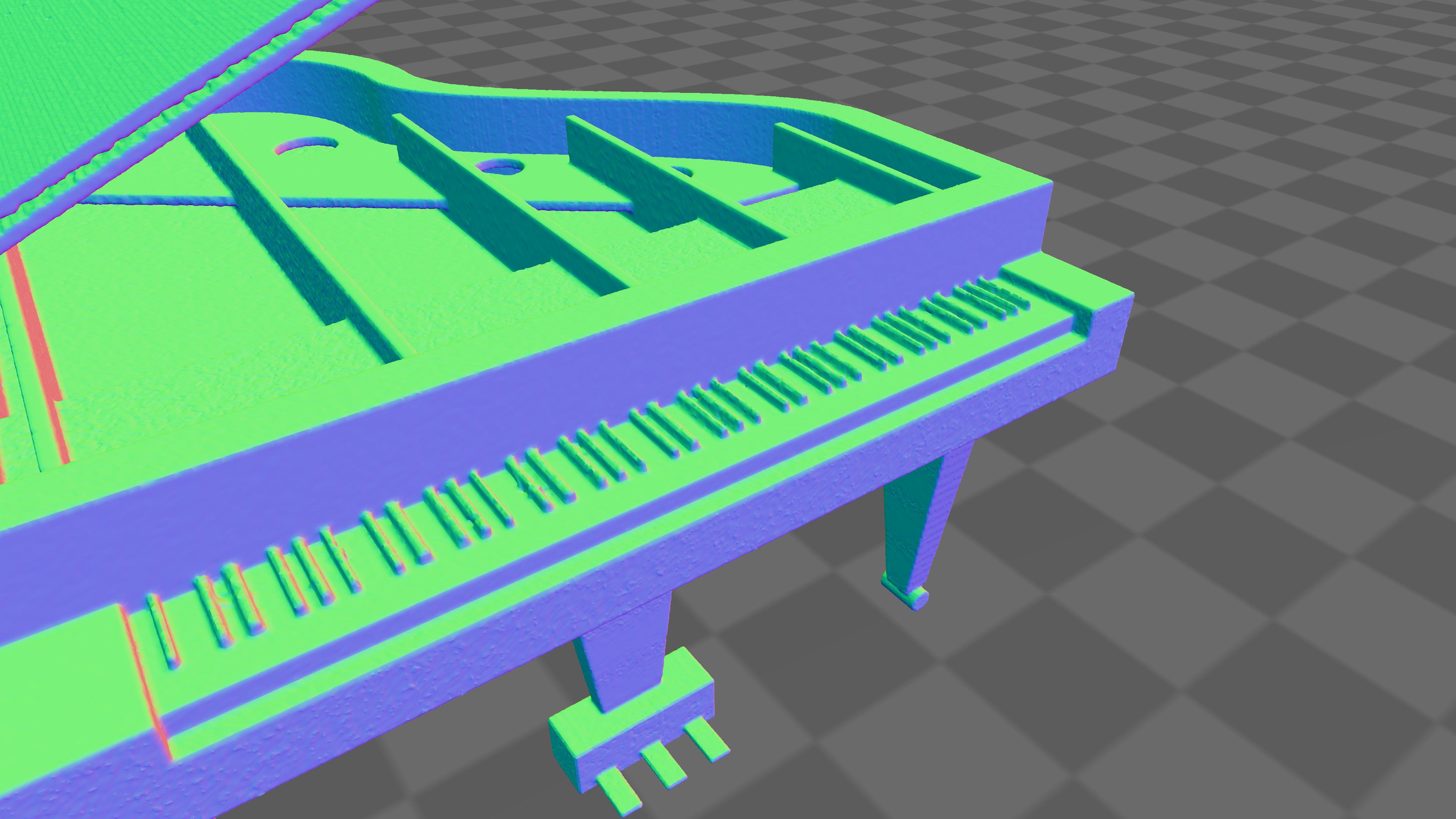

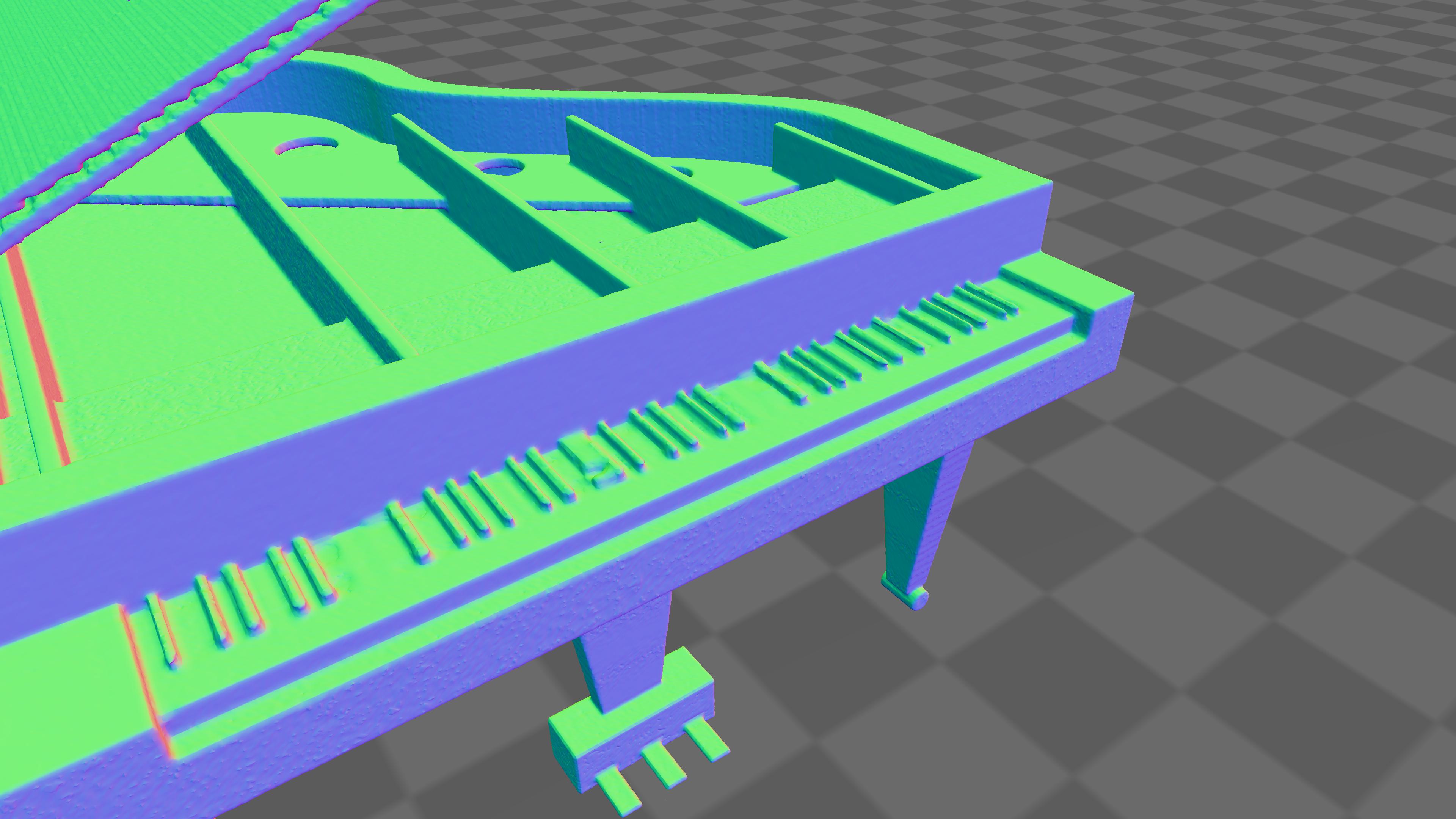

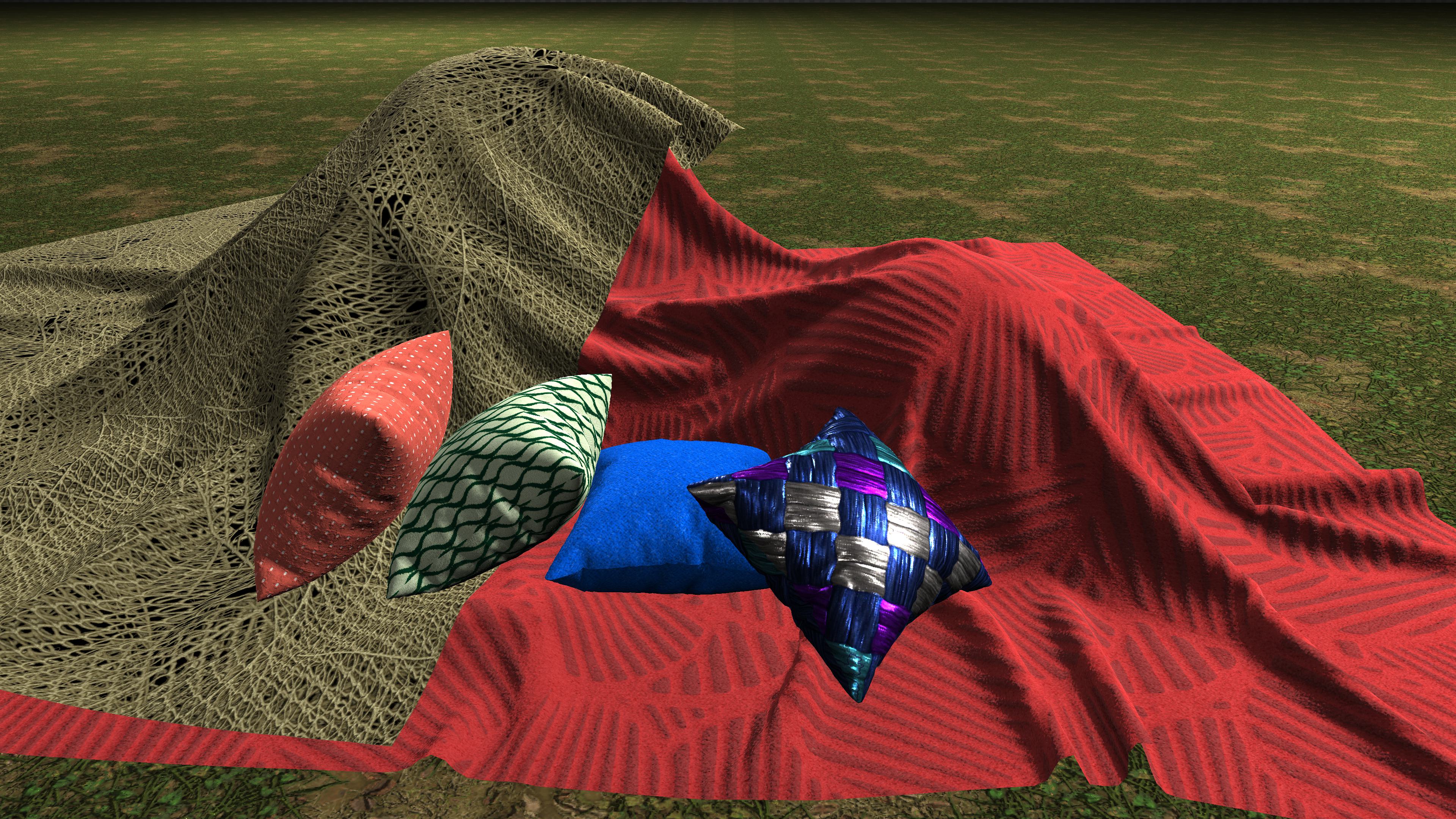

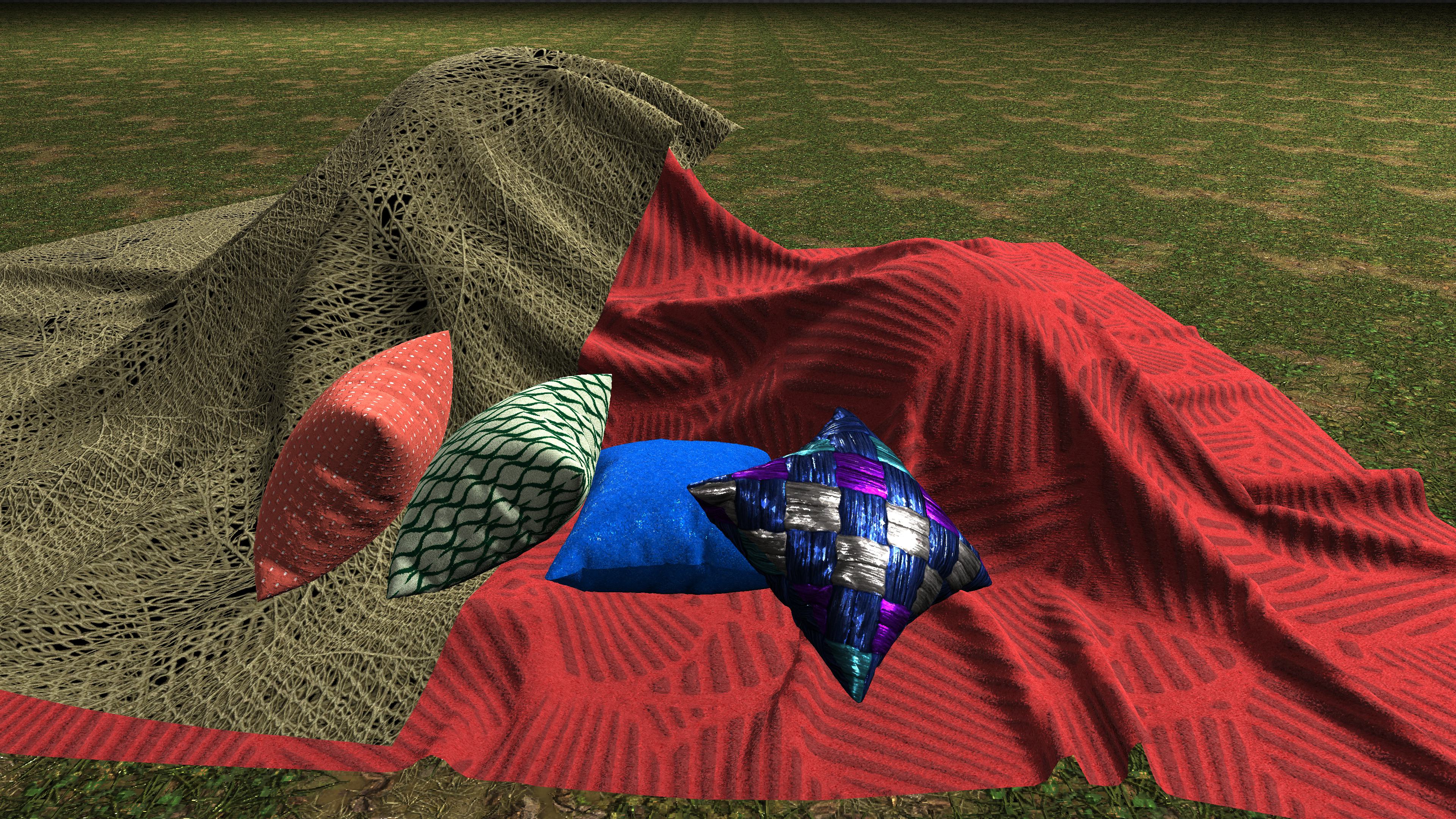

12x texture compression (Albedo) and filtering using differentiable indirection compared with uncompressed anisotropic (16spp) filtered textures. Our network uses pixel footprint information to generate appropriately filtered results.

12x texture compression (Albedo) and filtering using differentiable indirection compared with 12x ASTC compressed nearest-neighbour sampled textures. Our network uses pixel footprint information to generate appropriately filtered results. However, unlike ASTC, our network can simultaneously compress and filter without any additional filtering hardware.

12x normal-mapped texture compression and filtering using differentiable indirection compared with uncompressed anisotropic (16spp) filtered textures. Our network uses pixel footprint information to generate appropriately filtered results.

12x normal-mapped texture compression and filtering using differentiable indirection compared with 12x ASTC compressed nearest-neighbour sampled textures. Our network uses pixel footprint information to generate appropriately filtered results. However, unlike ASTC, our network can simultaneously compress and filter without any additional filtering hardware. Notice ASTC nearest-neighbour samples are much noisier at larger pixel-footprints due to aliasing, but our technique produces better filtered results at similar resource utilization.

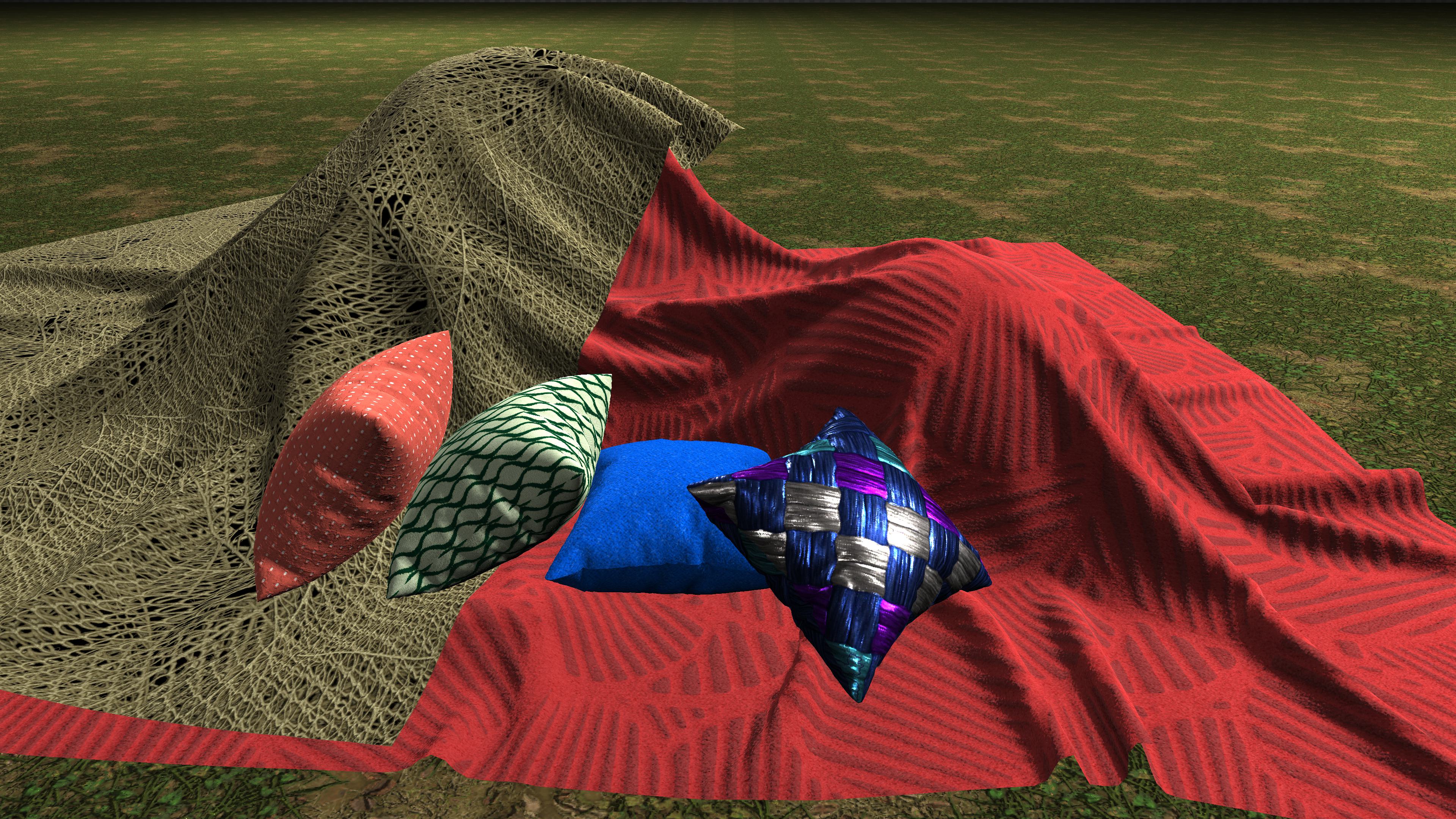

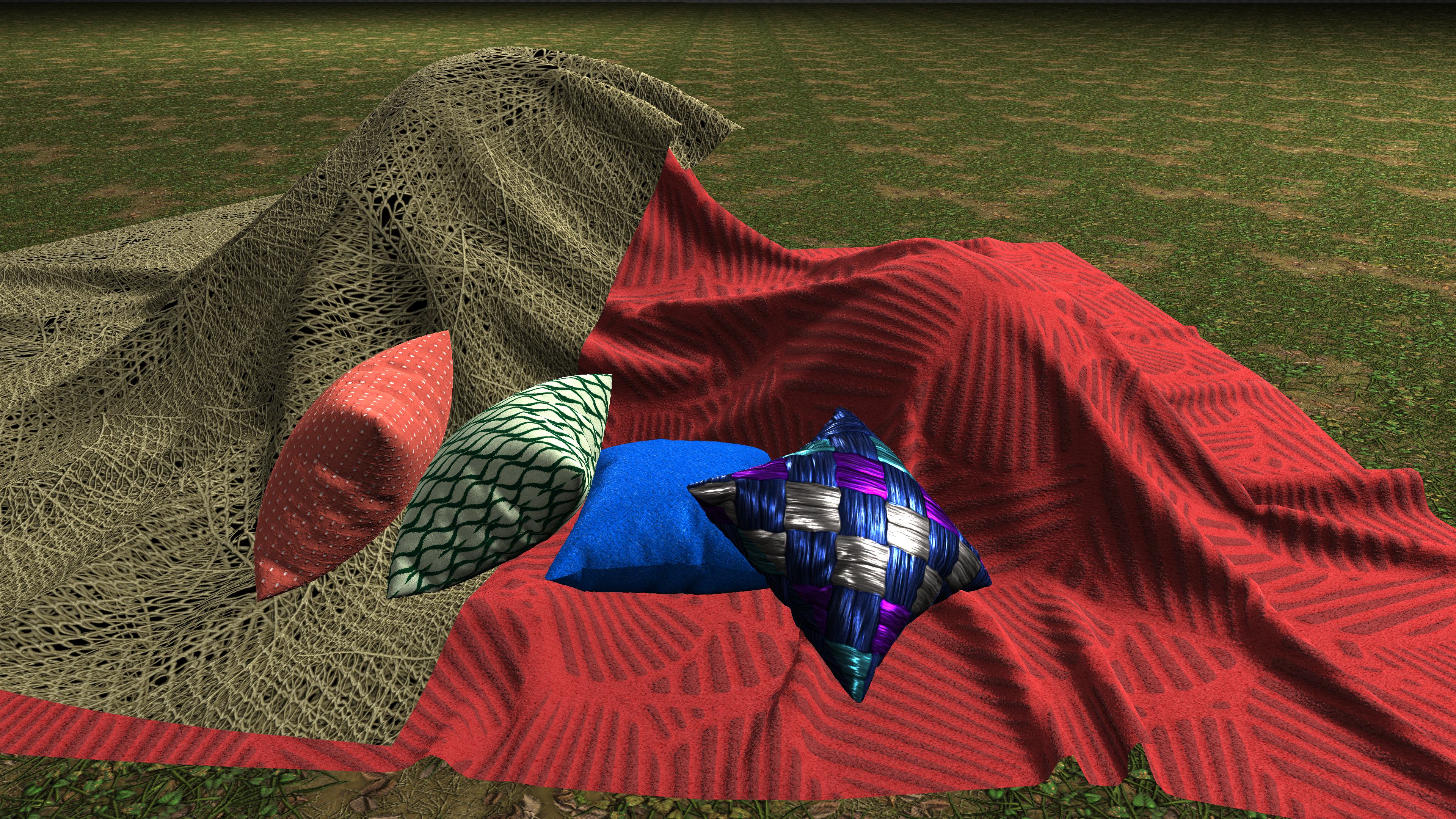

Combined shading (our Disney) and texture filtering (12x compressed albedo and normal maps) using differentiable indirection compared with uncompressed anisotropic textures and analytic Disney shading.

Combined shading (our Disney) and texture filtering (12x compressed albedo and normal maps) using differentiable indirection compared with ASTC nearest-neighbour sampled textures and analytic Disney shading.

Compressed NeRF representation (89 MB) using differentiable indirection compared with an uncompressed voxel representation (860 MB) trained using the Direct Voxel technique.

Compressed NeRF representation using differentiable indirection compared with MRHE with 8 levels (6 dense + 2 hash) + MLP (4 hidden layers, 16 wide).

Downloads

Paper: differentiableIndirection.pdf

Code: GitHub

Presentation video: HD (MP4, 154MB) || Captions || Slides

Results video: HQ (MP4, 3.7GB) || LQ (MP4, 500MB) || Captions

Bibtex: din.bib

Acknowledgements

We thank Cheng Chang, Sushant Kondguli, Anton Michels, Warren Hunt, and Abhinav Golas for their valuable input and the reviewers for their constructive feedback. We also thank Moshe Caine for the horse model under a CC-BY-4.0 license, and Adobe Substance-3D for the PBR textures. This work was done when Sayantan was an intern at Meta Reality Labs Research. While at McGill University, he was also supported by a Ph.D. scholarship from the Fonds de recherche du Québec – Nature et technologies.